80/20 Reality of AI Design

Minimal AI interfaces are not yet a suitable design choice, and for a very good reason.

By Hazel Maria Bala

A System Not Yet Ready to Be Quiet

The idea of minimal AI interfaces is seductive. If a system can ingest large volumes of information, detect sentiment shifts, and surface market signals, the human role should narrow considerably. An 80/20 split. Eighty percent intelligence, twenty percent human interaction.

That assumption is directionally correct. It is also premature.

This became evident while working on sentiment-driven systems designed to identify market signals under uncertainty. The initial hypothesis was straightforward: if the model could process news, commentary, and open-source data at scale, the interface should recede. The human would set intent, review synthesized insights, and intervene only when necessary.

In practice, the system required more structure, not less.

The limitation was not computational capacity but judgment. While the models could detect correlations and tonal shifts, they struggled to infer intent, distinguish signal from noise, and determine why a pattern mattered in a specific context. Sentiment alone did not produce insight. Without guidance, the system generated outputs that were fluent but insufficiently framed.

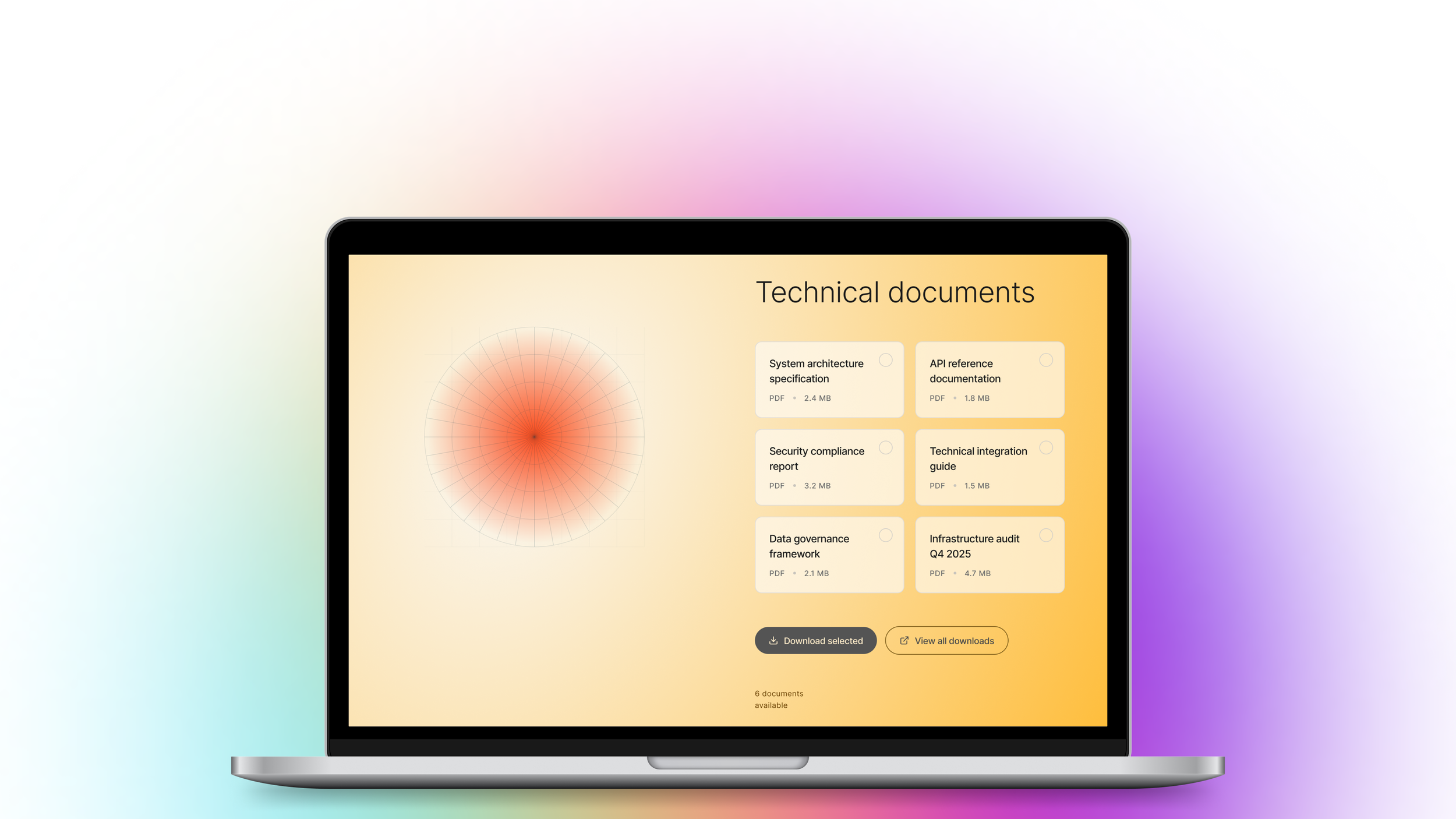

Additional interface elements were introduced, not to operate the system, but to align it. Inputs constrained scope. Controls anchored hypotheses. Mechanisms helped the human direct how insights were extracted rather than passively received. This was not interface inflation. It was epistemic scaffolding.

The dynamic was simple: when intelligence is incomplete, the interface absorbs the uncertainty.

This became especially clear when open-source geopolitical data entered the system. Emotionally charged reporting, accurate in isolation, was aggregated without sufficient contextual grounding. In some cases, the model produced confident causal narratives that exceeded the available evidence. The failure was not visual or interactional. It was one of premature authority.

At that point, the interface became a risk surface.

Once language is presented through a product, it acquires legitimacy. Fluency implies correctness. Confidence implies intent. Silence implies approval. Designers do not control model weights or training data, but they do control how much authority a system is allowed to project.

Here, the role of UI was not to simplify interaction, but to slow the system down. To introduce friction where uncertainty was high. To keep judgment visible and shared, rather than quietly automated away.
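As a rough illustration of what "friction where uncertainty was high" could look like in practice, the sketch below gates how an insight is presented. Everything in it is an assumption made for illustration, not a description of the system discussed here: the `Insight` fields, the thresholds, and the three presentation modes are hypothetical, and a model-reported confidence score is itself only a proxy for real uncertainty.

```python
from dataclasses import dataclass
from enum import Enum


class Presentation(Enum):
    DIRECT = "direct"          # show the insight as-is
    QUALIFIED = "qualified"    # show it with explicit uncertainty and sources
    HOLD_FOR_REVIEW = "hold"   # require human confirmation before display


@dataclass
class Insight:
    text: str
    confidence: float        # hypothetical model-reported score in [0, 1]
    source_count: int        # independent sources backing the claim
    claims_causation: bool   # asserts a cause, not just a correlation


def choose_presentation(insight, min_sources=3, high=0.85, low=0.6):
    """Pick how much authority the interface lets an insight project.

    Thresholds are illustrative placeholders, not tuned values.
    """
    # Causal narratives on thin evidence are held, however fluent or confident.
    if insight.claims_causation and insight.source_count < min_sources:
        return Presentation.HOLD_FOR_REVIEW
    # Low confidence always goes back to the human.
    if insight.confidence < low:
        return Presentation.HOLD_FOR_REVIEW
    # Middling confidence or thin sourcing is shown, but visibly qualified.
    if insight.confidence < high or insight.source_count < min_sources:
        return Presentation.QUALIFIED
    return Presentation.DIRECT
```

The design choice worth noticing is the first branch: a confident causal claim with too few sources is held regardless of its score, which is one way to keep premature authority from reaching the user unexamined.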

This is the distinction often missed in discussions of minimal AI UI. Restraint is not achieved through subtraction. It is achieved through earned trust.

“A system that cannot reliably regulate its own uncertainty has not earned the right to be quiet through minimal design. Eventually it will (and I can’t wait).”

Regulation, Trust, and Organizational Readiness

This question of readiness extends beyond interface design. It’s inseparable from regulation, institutional trust, and organizational maturity.

Regulated environments do not fail because interfaces are too complex. They fail when systems project confidence without accountability. Auditability, data lineage, and explainability are not interface features to be toggled on demand. They are organizational commitments that must be embedded upstream, long before a UI is simplified.

Trust operates the same way. Users do not lose trust because an interface asks too much of them. They lose trust when systems act decisively in moments where uncertainty should have been explicit. Minimal interfaces amplify both competence and failure. Without strong governance, they accelerate risk rather than reduce it.

AI interfaces will eventually become quieter and more minimal. But that quiet will be conditional. It will emerge only when organizations can demonstrate epistemic discipline, clear decision boundaries, and a willingness to withhold conclusions as deliberately as they generate them.

Until then, interface design remains a mechanism of accountability.